As students interact with learning environments, they are constantly creating data through these interactions. Learning analytics is a field of education research and practice that involves using these data to make decisions that further enhance learning and the environments in which it occurs.

The Gwenna Moss Centre for Teaching and Learning (GMCTL), Information and Communications Technology (ICT), and other key stakeholders have collaborated to build principles and processes that enable us to undertake learning analytics projects with departments, schools, and colleges, all with due consideration of privacy and ethics.

Which questions can learning analytics answer?

Though learning analytics questions can take many forms, the following questions are a small glimpse into what is possible at a program level. If you have a different question in mind, please contact us to discuss whether learning analytics could lead to the answers you seek.

- Has our curriculum change had the impact we intended?

- Which flexible learning practices are most effective for creating equitable learning opportunities in our programs?

- Is there a particular program-level outcome with which students struggle disproportionately across our program?

Ultimately, a question in learning analytics should enable someone to make a change to improve learning, whether that’s academic leaders, individual instructors, or the students themselves. For example, depending on what is found with the questions above, we may choose to further tweak a recently changed curriculum, build workshops to support faculty with effective flexible learning activities, or investigate why students are struggling on a specific program learning outcome.

Existing Data and Tools

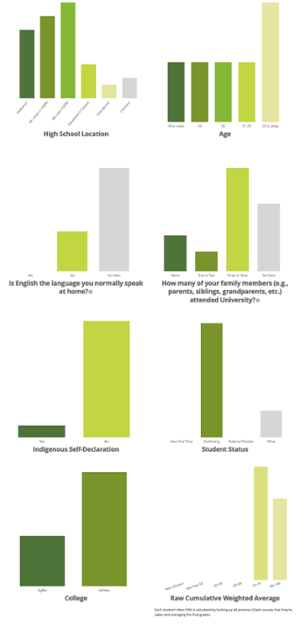

Know Your Students visualizes demographic data at the program level rather than the course level. It is intended for curriculum and program planning. It allows authorized users to view and drill down into data across a variety of variables, including student demographics, course, term, section, program, college enrolled, grade distribution, and more. Access to this dashboard requires permission of the Dean, Associate-Dean, or Vice-Dean of your College.

Questions this answers

- What is the average grade (cumulative CWA) of students who are taking certain course(s) in my department and who do NOT usually speak English at home?

- What are the characteristics (course load, international/domestic status, first in family, program enrolled, etc.) of students who are taking certain course(s) in my program and have achieved final grades over 85% in those courses?

- What are the degree programs that students are enrolled in who are taking certain course(s) in my department?

Strengths

- Well organized graphics allow you to easily visualize a lot of data all at once for many students and courses.

- Great place to look for concerning differences between student groups that can then be investigated more thoroughly elsewhere.

Limitations

- Details can be lost in aggregation, so be careful not to read too deeply into what you see.

- Useful at the program level; not intended for use by individual instructors (see Know Your Class instead).
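As a small illustration of how detail can be lost in aggregation, the Python sketch below uses made-up grades for two hypothetical student groups (`group_a` and `group_b` are illustrative names, not dashboard fields). The overall average looks unremarkable, while the group averages differ noticeably.

```python
from statistics import mean

# Made-up final grades for two hypothetical student groups.
grades = {
    "group_a": [82, 85, 88, 90],
    "group_b": [58, 62, 65, 70],
}

# Aggregated view: one average across every student.
overall = mean(g for group in grades.values() for g in group)

# Disaggregated view: one average per group.
by_group = {name: mean(values) for name, values in grades.items()}

print("overall:", overall)    # overall average is 75
print("by group:", by_group)  # group_a averages 86.25, group_b averages 63.75
```

A gap like this is a prompt for further investigation rather than a conclusion in itself.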

Questions this answers

- Where might there be opportunities to improve student learning by offering courses in a different modality?

- Over time, are we becoming more or less flexible as a unit, with respect to the learning environments we offer?

Strengths

- Dashboard makes sense of complex enrollment data, making it much easier to read and understand.

- A variety of views and displays enables these data to provide a wide range of insights about instructional modes.

Limitations

- The best action to take is highly contextual and not immediately obvious, so this dashboard may lead to more questions than answers.

- Lacks context beyond simple enrollment numbers, such as student performance and information about withdrawals.

Questions this answers

- What barriers to success are students facing in our courses?

- Are there courses with disproportionately large numbers of exam accommodation requests?

Strengths

- Relatively strong data validity, considering all official requests are tracked and included in the data.

- Data are not associated with individuals, allowing exam accommodations to be discussed while maintaining student privacy.

Limitations

- Only official exam accommodation requests are tracked; there are no data about other accommodations.

- There is no way to look at the attributes of the exams themselves, so dashboard data will need to be contextualized with unit-specific knowledge to know the implications.

Know Your Class gives instructors an “at a glance” view of the demographic composition of students in their courses. This allows instructors to build the learning environment specifically for their current cohort of students. For example, an instructor teaching a first-year course with many students who have no family members who attended university can provide additional explanations and links to resources, as those students are less likely to have family members who know about the available supports and would encourage them to seek those supports out. Know Your Class is accessed through Canvas.

Questions this answers

- Beyond visible manifestations of diversity, what else makes my class unique?

- Am I making assumptions in my teaching that may disadvantage a certain group of students?

- What special supports or assistance should I be offering to my students, based on what I see in the infographic, to help them succeed?

Strengths

- Wide variety of demographic attributes (see list here) gives a big-picture view of who is in your class and allows instructors some insight into aspects of diversity that may not be as visible.

- Offer supports and learning activities that reflect your learners – for example, if you have students from a variety of colleges taking your course as an elective then it may be helpful to their learning if you allow more choice and flexibility in assessments to relate the course context to their major.

- See also: Strategies on how to effectively use the Know Your Class infographic.

Limitations

- Intersectional identities are not shown; you will not know how many of your students have identities that overlap between the different attributes.

- Cannot be used in courses with fewer than 10 students due to privacy of sensitive personal student data.

- It is not always immediately clear how to apply this knowledge to your teaching; consider booking a consultation by emailing gmctl@usask.ca for advice on how you could apply this information.
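The minimum class size noted above is a form of small-cell suppression. Here is a minimal Python sketch of the idea, assuming a hypothetical `summarize_class` helper, a hypothetical record layout, and a threshold applied to total class size. It is an illustration only, not a description of how Know Your Class is actually implemented.

```python
from collections import Counter

MIN_CLASS_SIZE = 10  # threshold taken from the limitation above


def summarize_class(students, attribute):
    """Return counts per attribute value, or None when the class is too small.

    `students` is a list of dicts (hypothetical layout); `attribute` is the
    key to tally.
    """
    if len(students) < MIN_CLASS_SIZE:
        # Too few students: withhold the summary to protect privacy.
        return None
    return dict(Counter(s[attribute] for s in students))


# Made-up classes: one below the threshold, one above it.
small = [{"first_in_family": True}] * 4
large = [{"first_in_family": True}] * 6 + [{"first_in_family": False}] * 9

print(summarize_class(small, "first_in_family"))  # None
print(summarize_class(large, "first_in_family"))  # {True: 6, False: 9}
```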

Built into Canvas, our centrally supported learning management system, is a feature called New Analytics – one of the more accessible and powerful learning analytics tools we currently have. In it, instructors can analyze their class as a whole, individual students, and individual learning materials to make whatever adjustments are helpful to improve the learning environment for everyone.

Questions this answers

- Are my students using the learning materials as intended? Are structural changes necessary?

- Which students would benefit most from outreach and support from me to be more successful in my current course?

Strengths

- Act quickly and effectively based on the data you see – for example, see which students haven’t logged in, review page views, and message students who haven’t yet turned in an assignment or who may be getting somewhat lower grades to offer additional support.

- The only tool we have that allows you to monitor student interactions with learning materials (i.e. views and interactions) to assess whether your learning materials might need promotion or refinement.

Limitations

- Data are limited to Canvas, so consider the other ways students may interact with course materials before acting on the data in New Analytics.

- Grades features work properly only when the relative weighting of assessments has been set up in the Assignments area of Canvas.
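To illustrate why the weighting setup matters, here is a minimal Python sketch of a weighted course grade. The group names, weights, and scores are hypothetical, and this is not Canvas's actual calculation. It only shows that the same scores produce different totals under different weights.

```python
def weighted_grade(scores, weights):
    """Combine per-group percentage scores using relative weights.

    Weights are normalized by their sum, so they need not add up to 100.
    """
    total_weight = sum(weights[group] for group in scores)
    if total_weight == 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[g] * weights[g] for g in scores) / total_weight


# Hypothetical percentage scores for one student.
scores = {"assignments": 90.0, "midterm": 70.0, "final": 60.0}

# Two hypothetical weighting schemes for the same assessment groups.
equal = {"assignments": 1, "midterm": 1, "final": 1}
exam_heavy = {"assignments": 20, "midterm": 30, "final": 50}

print(round(weighted_grade(scores, equal), 1))       # 73.3
print(round(weighted_grade(scores, exam_heavy), 1))  # 69.0
```

If the weights are missing or wrong, the grade shown for a student can differ from the grade the syllabus implies, which is why the setup step matters.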

SLEQ is our centrally supported student course feedback tool. Reports are issued to instructors and academic leaders on a per-course basis. In addition, there is an archive of historical reports available to view feedback across several academic terms, giving insights into trends over time and progression towards specific teaching and learning goals.

Strengths

- Colleges and departments can create their own questions to give insight into the aspects of the learning environment that are uniquely important to them.

- The frequency and granularity of the questionnaires allow instructors to monitor their progress towards their specific teaching and learning goals, to identify aspects of the course that could benefit from extra attention.

- Mid-course SLEQ gives instructors an opportunity to adjust courses in progress to ensure that the learning experiences for their current students are as effective as can be.

Limitations

- Low response rates can adversely affect the validity of the data; instructors are encouraged to prompt their students to respond to the questionnaire so that it is as useful as possible.

- Reports are static, meaning that viewers cannot drill deeper into the report for more insights beyond what is shown.

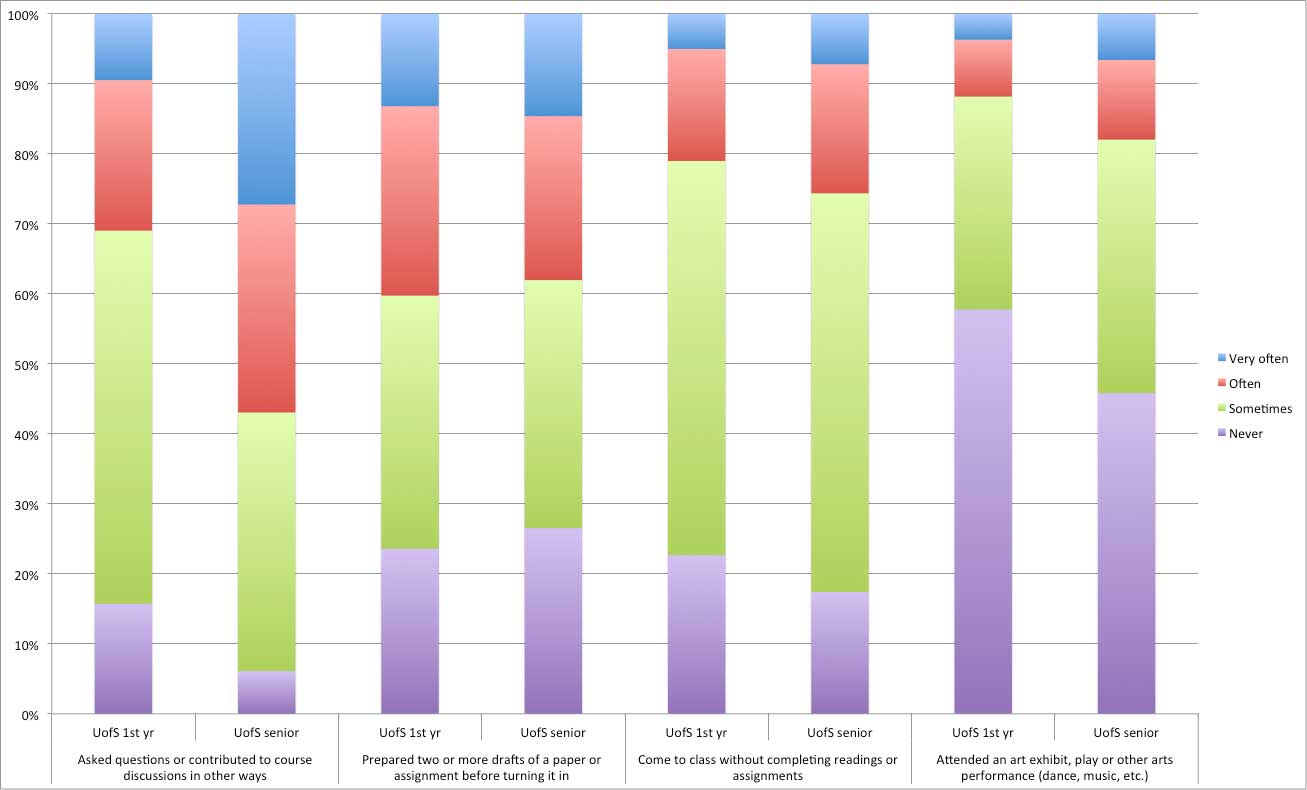

Every three years the University of Saskatchewan participates in the National Survey of Student Engagement (NSSE). NSSE is a standard survey sent to students at several universities across Canada, and is a helpful tool in learning analytics because it is the only one with data that extends beyond USask. College-specific NSSE data is particularly useful to units planning program revisions or teaching innovations, or preparing to complete an Academic Program Review self-study.

Questions this answers

- How engaged are our students with their studies as a whole?

- How does the experience we offer compare within and beyond USask, in first year and in upper years?

- What actions could we take for highest impact on student engagement, in first year and in upper years?

Strengths

- Comprehensive survey that can paint a vivid picture of the student experience in your college.

- Comparisons to other universities are provided, serving as a benchmark.

- Helpful to compare the first-year experience to the experiences of students in upper years.

Limitations

- Due to the standardized nature of the survey, some included items may be less relevant to our USask context, and some items we would find relevant may be missing.

- Frequency of our NSSE (once every 3 years) means that other tools should be used to track and assess change over smaller time scales.

In March 2022 and May 2024, the Office of Teaching and Learning, with support from the Gwenna Moss Centre, ran a Teaching Practices Survey to learn about instructional practices used by instructors, instructor beliefs about teaching, and their perceptions of the teaching climate. For academic units with high enough response rates, unit-specific reports were written and shared with leaders.

Questions this answers

- How well do practices reported by instructors align with their beliefs about teaching and learning?

- Which specific practices are instructors reporting they use in their courses?

- What enablers and barriers exist at USask, with respect to teaching enhancement?

Strengths

- Hear information directly from instructors for an in-depth look at the instructor perspective on teaching and learning.

- Identify gaps that exist between beliefs and practices, to adjust alignment of faculty support based on existing beliefs and practices.

- Unit-specific reports enable units to compare their instructional practices to USask overall results, to help them identify areas for unit-led teaching enhancement efforts.

Limitations

- Due to the nature of the survey, we are only able to run it once every few years, so the data is not quite as dynamic as other sources.

- TPS 2022 was run during March 2022 and asked instructors to answer thinking of courses they taught in the past 2 years, meaning that many of the reported practices were for courses taught during the COVID-19 pandemic period.

Learning Analytics Projects

When faced with new learning analytics questions, GMCTL and ICT will create a project to assist academic units in answering these questions and to enact changes to improve learning based on these findings. A general process for these projects is outlined below.

In this step, we check for ethics and privacy, and whether the request and use case align with USask’s Learning Analytics Principles. This is based on an initial question or curiosity.

In this step, we define our project team and estimate the work required for the project. There must be a sufficient estimated impact given the resource cost.

With a better idea of project scope and limitations, by the time we are in step 3, we are ready to further refine and define the question(s) and goals of the project. For example, when we say we want to measure student achievement of learning outcomes, what exactly would we accept as evidence of this achievement? Is there data that measures it?

This stage includes data collection (if it does not already exist) and iterative development and refinement, building towards the final product. We begin with creating something that aligns with what was agreed upon in Step 3, seek feedback from the end users, then make changes. This is iterated until we have the best possible product to release.

Once the technical work is completed, we create a support plan to ensure everyone is aware of the tool and how to use it, including any known caveats or limitations. Once the supports are created, we put the tool in the hands of educators and/or students.

If one group on campus finds a certain learning analytics project worthwhile, chances are others will find it useful too. For this reason, we ask whether the outputs can be generalized for a broader audience, then generalize them accordingly such that everyone can benefit from the project.

In alignment with Principle #8 (see section below), this step is for the project team to reconnect with the end users after some time to understand how the tools have been used. We will discuss if there are any changes or supports needed for the tools to work better in learning environments.

This is intended to be a step in the process that is never truly “complete” as long as the tools are in use as needs and circumstances evolve.

Learning Analytics Principles

To ensure learning analytics are used appropriately, we adhere to 9 principles in our learning analytics work. Download the document below to learn about each of these principles.

- Compliance

- Individuality

- Consent

- Ethical Data Use

- Validation

- Benevolence

- Biases & Limitations

- Duty to Act

- Purpose & Intent

Student Data and Privacy

Student data are securely stored in the University Data Warehouse. Student data may be created by faculty, instructional staff, advisors, administrators, administrative and instructional systems, and by students themselves. Student data can include academic performance data, advising data, survey data, admissions and demographic data as well as activity data associated with university academic and administrative services (such as learning management systems, student response systems, network services, and card swipe systems). In addition, student data may include derived interpretations based on statistical models and patterns. Student data may be used for learning analytics with permission of the University Data Steward.

Student data for learning analytics does not include the contents of email messages or other personal communications, discussion forum or social media postings, or notes or written materials for which the student could claim copyright. Data of this type will not be used for learning analytics work without the express written consent of the student.

Instructors in courses are not privy to their students' personal or demographic data, nor to their students' academic history outside the course or predictions of success within the course unless permission is given by the student. Instructors are not to discuss or disclose personal or academic information about students to other students or instructors. As such, instructors are limited in the scope of learning analytics work that they can do with their own students.

Academic advisors are granted special access to personal, demographic and academic history data about students, and they follow a strict code of conduct to ensure that students are protected.

Get support

For support or a consultation on this topic, reach out to the team at the Gwenna Moss Centre for Teaching and Learning.