SLEQ

Student Learning Experience Questionnaire

The Student Learning Experience Questionnaire (SLEQ) is USask’s University Council-approved tool for collecting course feedback from students. It collects both end-of-course feedback and mid-course feedback partway through the term.

Positioning of SLEQ

SLEQ’s design is informed by current research on student course feedback, best practices in higher education, wide consultation with faculty across campus, and validation work with students. We recognize students as key stakeholders in teaching and learning, but SLEQ was designed with an acknowledgement of the limitations of their perspectives.

- Students have a complete view only of their own learning experience, so all core questions ask only about what they personally experienced.

- Students may interpret rating scales differently, so interrater reliability cannot be assumed; each rating reflects that student's own perspective rather than a direct evaluation of teaching.

- SLEQ feedback should be considered alongside other sources of evidence of teaching effectiveness, including peer reviews of teaching, self-assessments, and/or learner data.

Structural Features of SLEQ

There are many advantages to using SLEQ, including:

- A cascading survey design allowing for college-, department-, and instructor-designed questions

- Term-adjustable flexibility to handle complex and diverse course structures including questions specific to particular types of courses (labs, seminars, tutorials, etc.)

- High-quality, actionable feedback from a redesigned and validated set of USask Core questions focused on enhancing teaching and learning rather than on student satisfaction

- Mobile access for survey completion

- Formative mid-course surveys

- Department/College configuration of course-level survey dates

- Customizable reporting

- Historical aggregate reporting showing institutional, college, and department norms, as well as term-by-term trends down to the course/instructor level

SLEQ includes both a mid-course and end-of-course survey. These are compared in the following table. Mid-course SLEQ is intentionally much shorter and simpler.

| Feature | Mid-course SLEQ (optional) | End-of-course SLEQ |
|---|---|---|
| Validated, USask-wide closed-ended questions | 3 | 6 |
| USask-wide open-ended questions | 2 | 3 |
| Instructor-personalized questions | 0-4 per course | 0-4 per course (results not shown to academic leaders; educator only) |
| College, school, and/or departmental questions | No | Yes, if submitted by the unit |
| Optional modular question sets | No | Yes |
| Optional context-specific course/instructor-type questions (lab, tutorial, seminar, etc.) | No | Yes |
| Who receives results | Individual educator only | Individual educator and academic leader (department head, dean, etc.) |

Due to licensing agreements, we cannot distribute our USask-wide survey questions beyond our institution. If you have a valid USask NSID, you can view the questionnaire in our Course Feedback PAWS channel.

Interpreting SLEQ Reports

The descriptive statistics included in SLEQ reports are the interpolated median, dispersion index, and percentage favourable for each closed-ended question. You'll also receive plain-text comments for every open-ended question. Although the mean is commonly used as a descriptive statistic for student feedback, the statistics we include produce more valid and meaningful insights because SLEQ's closed-ended questions yield rating-scale (ordinal) data, for which the mean can be misleading.

We understand that these statistics are not commonly used, so we have created a Canvas course for instructors to help them read and interpret their SLEQ report statistics. Please enroll yourself at the link below.

If for some reason you need access to reports that include the mean and/or comparators, please email sleq_help@usask.ca to request a different version of the report.
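To make the three closed-ended statistics more concrete, here is a minimal illustrative sketch in Python. It is not how SLEQ reports are calculated: it assumes integer ratings on a 1 to 5 scale, treats ratings of 4 or 5 as "favourable", and uses Leik's ordinal dispersion as a stand-in for the dispersion index. The function names and cut-offs are hypothetical, and the exact definitions used in SLEQ reports may differ.

```python
from collections import Counter


def interpolated_median(responses: list[int]) -> float:
    """Interpolated median for integer ratings.

    Assumption: each rating category is treated as an interval of width 1
    centred on its value (e.g. a rating of 4 covers 3.5 to 4.5).
    """
    if not responses:
        raise ValueError("no responses")
    counts = Counter(responses)
    half = len(responses) / 2
    below = 0  # number of responses in categories below the current one
    for category in sorted(counts):
        n_cat = counts[category]
        if below + n_cat >= half:
            # Interpolate linearly within the category that holds the median
            return (category - 0.5) + (half - below) / n_cat
        below += n_cat
    raise AssertionError("unreachable for non-empty input")


def percent_favourable(responses: list[int], favourable_min: int = 4) -> float:
    """Percentage of responses at or above a hypothetical favourable cut-off."""
    if not responses:
        raise ValueError("no responses")
    favourable = sum(1 for r in responses if r >= favourable_min)
    return 100 * favourable / len(responses)


def ordinal_dispersion(responses: list[int], scale_max: int = 5) -> float:
    """Leik's ordinal dispersion (assumed stand-in for the dispersion index).

    0 means every response falls in one category; 1 means responses are
    split evenly between the two extremes of the 1..scale_max scale.
    """
    if not responses:
        raise ValueError("no responses")
    n = len(responses)
    counts = Counter(responses)  # missing categories count as 0
    cumulative = 0
    total = 0
    for category in range(1, scale_max):  # categories 1 .. k-1
        cumulative += counts[category]
        total += min(cumulative, n - cumulative)
    return 2 * total / (n * (scale_max - 1))


ratings = [5, 5, 4, 4, 4, 3, 2, 5, 5, 4]
print(interpolated_median(ratings))  # 4.25
print(percent_favourable(ratings))   # 80.0
print(ordinal_dispersion(ratings))   # 0.35
```

The interpolated median refines the ordinary median by using how responses are distributed within the median category, which keeps it meaningful for rating-scale data where many students choose the same value.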

History of SLEQ development

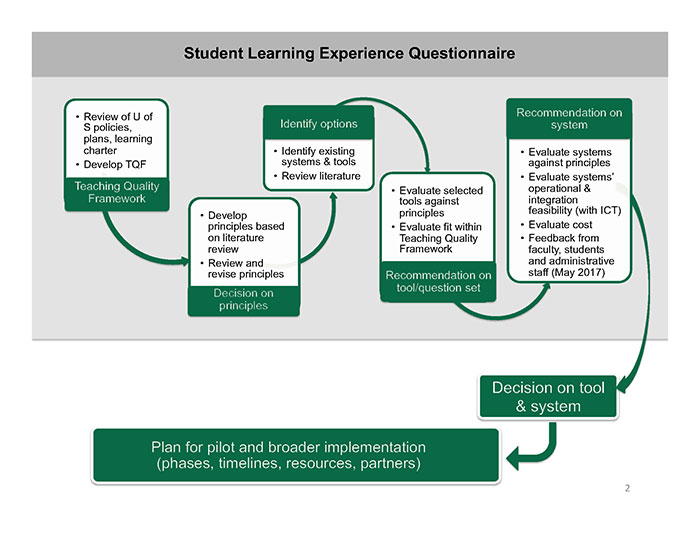

The chart below is an overview of the process that brought USask to our initial pilot of SLEQ. The timeline found below the chart includes the same details, but continues beyond the pilot up until the present.

Timeline: Process by TLARC Working Group and Summary Documents